The Enterprise AI Management and Observability Platform

Elevate AI Impact with Strategic Management and Unmatched Visibility

KEY ADVANTAGES

Centralized Management of Prompts, LLMs, Agents, ML Models, Data and APIs

End-to-End AI Pipeline Management and Visibility

Performance and Cost Monitoring Alerts for Prompts, Agents and Models

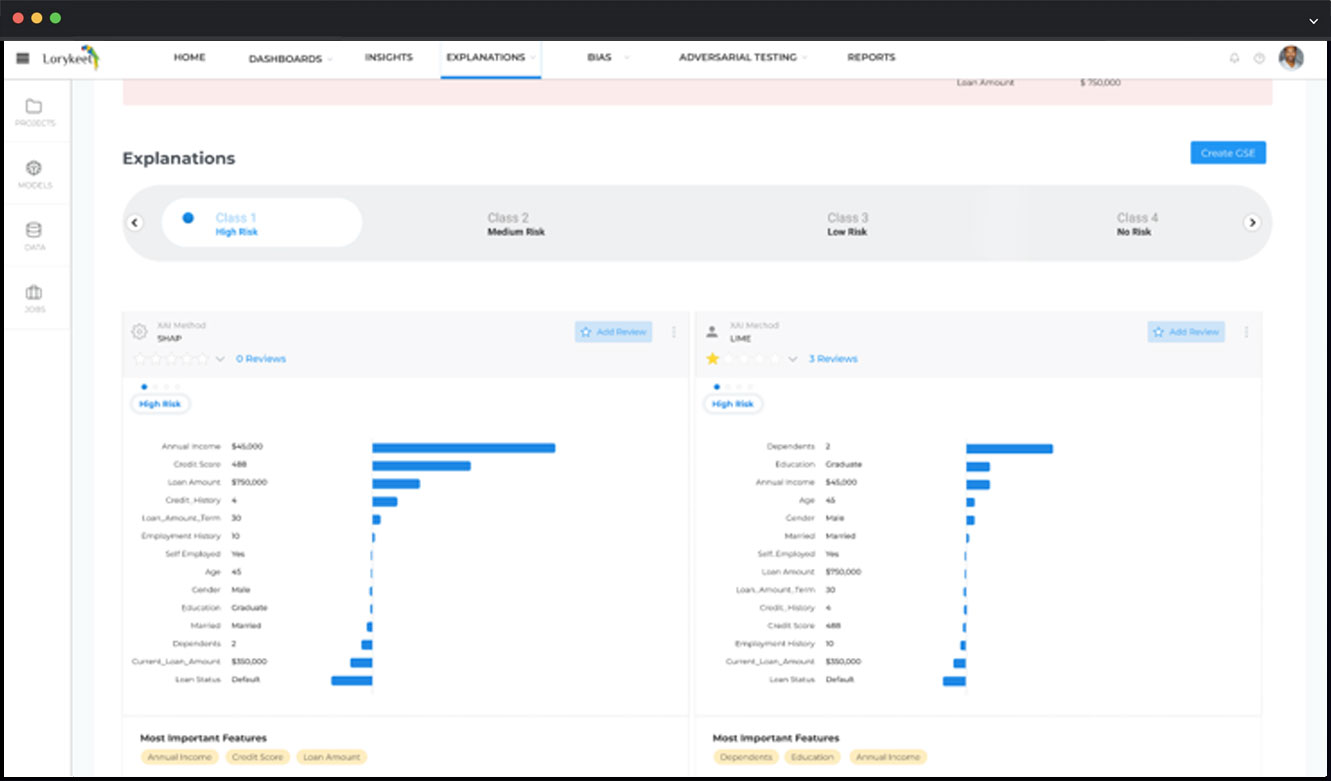

Explainable AI and "What-If" Analysis to Build Trust in AI and Accelerate Adoption

Automated Testing and Evaluation

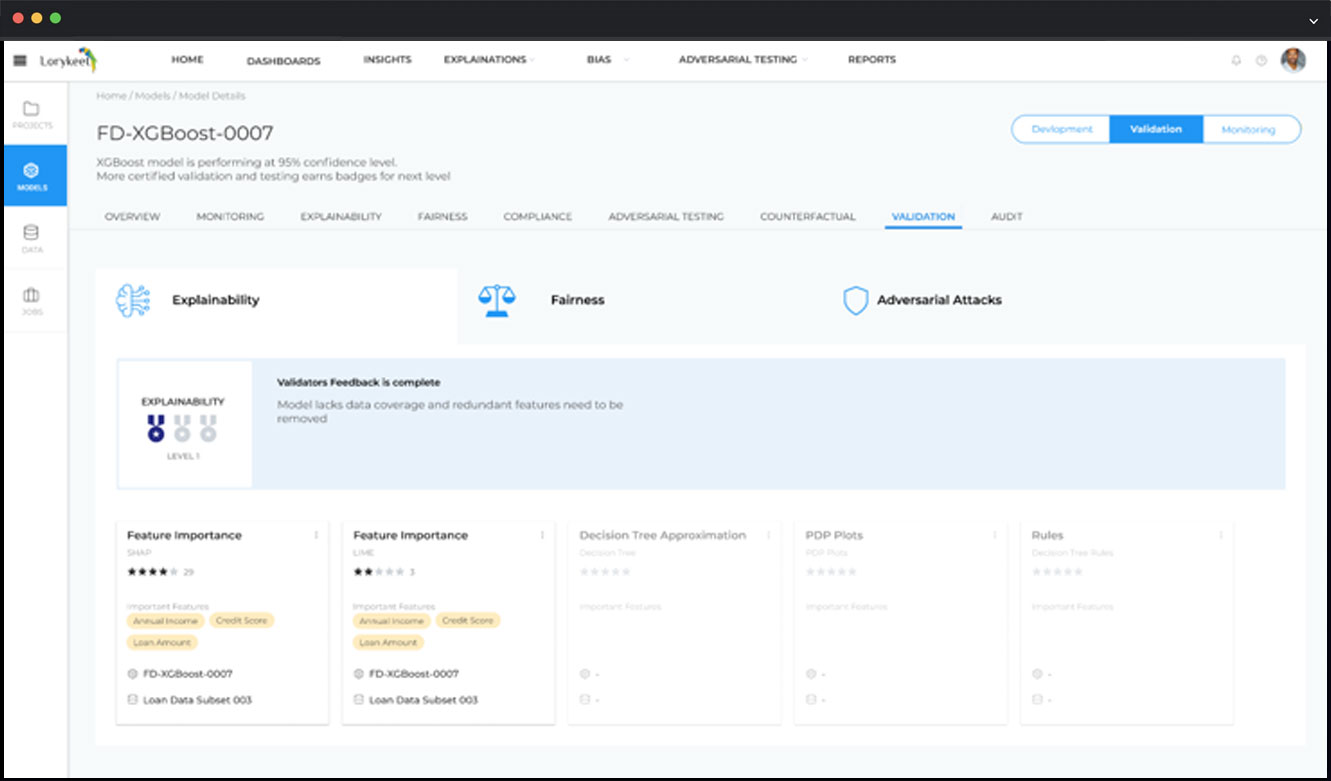

Humans in the Loop for Validation and Oversight

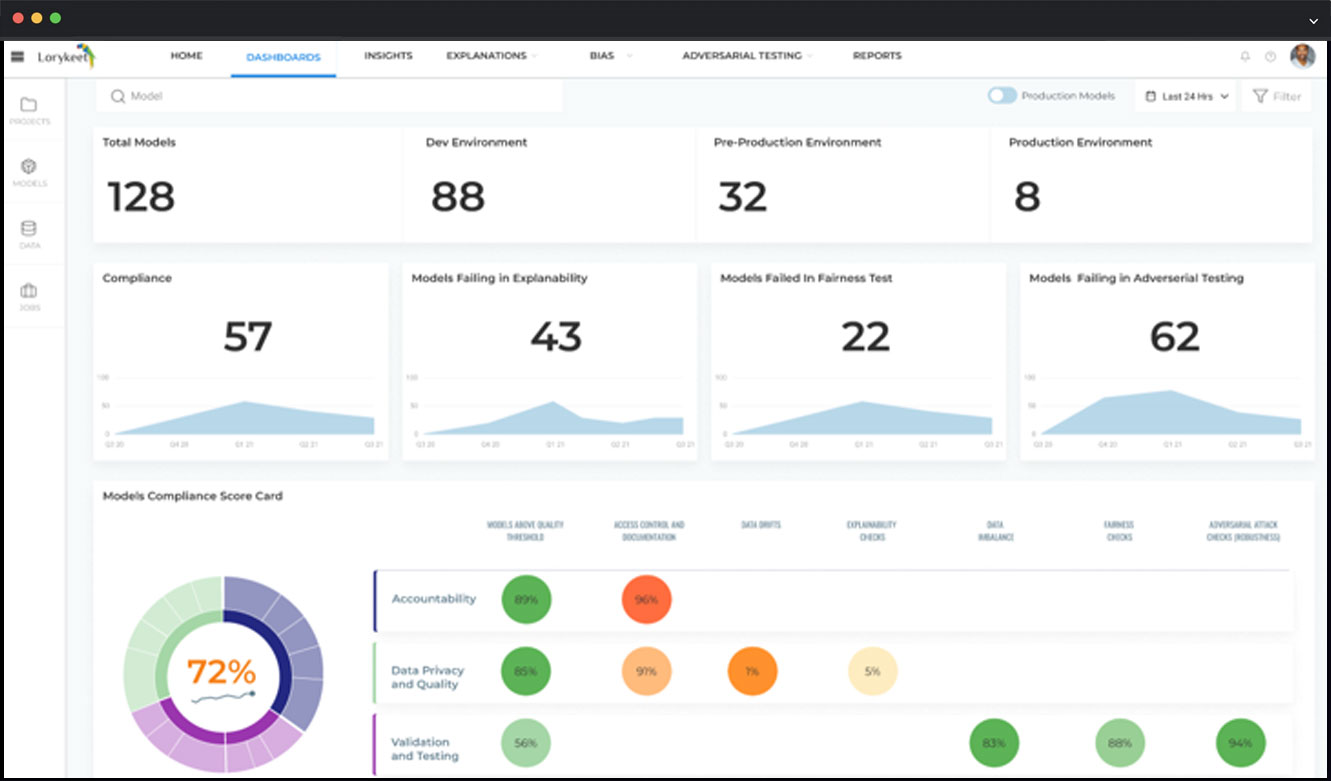

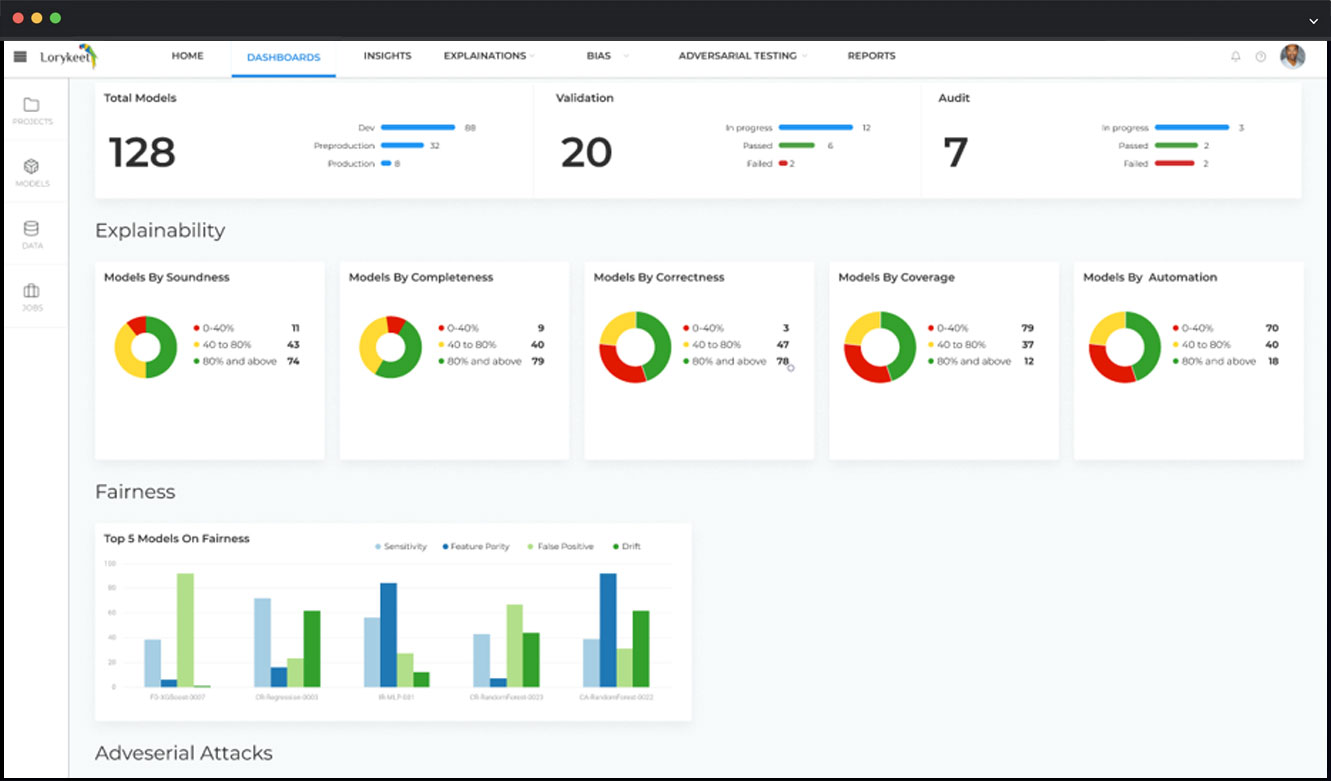

Our technology provides a unified, end-to-end solution for managing and optimizing the AI lifecycle

Prompts and Data Management

Model, Agent and API Management

Automated Testing and Evaluation

Observability and Monitoring

Power AI with Smarter Prompts and Organized Data

Structure and Organization Matter

Centralize ML Models + Agents + APIs

Comprehensive Management for Models, Agents, and APIs

Confidence in AI Starts with Reliable Evaluation

Responsible AI You Can Trust: Test, Evaluate, Improve

Ensure your AI models and agents perform with accuracy, reliability, and trust. Our platform enables automated evaluation, A/B testing, and real-time observability to assess performance, detect anomalies, and optimize outcomes. Compare models side by side, define custom test suites, and implement guardrails to prevent errors and biases. With detailed logging and performance monitoring, gain full visibility into model behavior. Continuously refine your AI for better decision-making, efficiency, and user satisfaction.
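As a rough illustration of what comparing models side by side on a custom test suite can look like, here is a minimal Python sketch. It is not the platform's API: the `accuracy` and `compare` helpers, the stand-in `model_a` and `model_b` functions, and the exact-match scoring rule are all assumptions made for this example.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical test suite format: (prompt, expected answer) pairs.
TestCase = Tuple[str, str]


def accuracy(model: Callable[[str], str], suite: List[TestCase]) -> float:
    """Fraction of cases where the output matches the expected answer
    (case-insensitive exact match; an assumed scoring rule)."""
    if not suite:
        return 0.0
    passed = sum(
        1
        for prompt, expected in suite
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return passed / len(suite)


def compare(
    models: Dict[str, Callable[[str], str]], suite: List[TestCase]
) -> Dict[str, float]:
    """Run every model against the same suite and collect the scores."""
    return {name: accuracy(fn, suite) for name, fn in models.items()}


# Stand-in "models" for illustration only; real ones would call an LLM or API.
def model_a(prompt: str) -> str:
    return {"2+2": "4", "capital of France": "Paris"}.get(prompt, "")


def model_b(prompt: str) -> str:
    return {"2+2": "4"}.get(prompt, "unknown")


suite = [("2+2", "4"), ("capital of France", "paris"), ("3*3", "9")]
scores = compare({"model_a": model_a, "model_b": model_b}, suite)
print(scores)
```

In practice the exact-match rule would likely be swapped for a task-specific metric, but the shape stays the same: one shared suite, one score per model.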

Total Visibility for Prompts, Models & AI Agents

Real-Time AI Insights for Reliable Performance

Gain real-time insights into your AI’s performance across prompts, models, and agents. Track key metrics like latency, accuracy, and system health while detecting anomalies and model drift before they impact results. With detailed logging, traceability, and automated alerts, quickly identify issues and optimize AI efficiency. Ensure your systems operate reliably and at scale with continuous monitoring, preventing failures and enhancing performance. Stay proactive with powerful real-time observability.
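To make the idea of latency anomaly alerts concrete, here is a minimal Python sketch that flags a reading when it sits far from the recent rolling average. The `LatencyMonitor` class, the window size, the warm-up count, and the z-score threshold are hypothetical choices for this example and do not describe how the platform detects anomalies.

```python
from collections import deque
from statistics import mean, stdev


class LatencyMonitor:
    """Flags latency readings that deviate strongly from a rolling window.
    Hypothetical helper; window and threshold values are assumptions."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.samples = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, latency_ms: float) -> bool:
        """Return True if the reading is anomalous relative to recent samples."""
        is_anomaly = False
        if len(self.samples) >= 5:  # wait for a few samples before judging
            mu = mean(self.samples)
            sigma = stdev(self.samples)
            if sigma > 0 and abs(latency_ms - mu) / sigma > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            # Keep outliers out of the baseline so they do not skew it.
            self.samples.append(latency_ms)
        return is_anomaly


monitor = LatencyMonitor()
readings = [100, 102, 98, 101, 99, 103, 97, 450, 100]
alerts = [r for r in readings if monitor.observe(r)]
print(alerts)
```

A production monitor would typically route each flagged reading to an alerting channel rather than collect it in a list.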

Customer Testimonial

We care about our customers' experience too

Karla Lynn

Sholl's Colonial Cafeteria

Tomas Campbell

Service technician